Modern microwave ovens have become indispensable kitchen appliances, providing quick and convenient cooking solutions for busy households. However, like any electronic device, microwaves can develop problems over time that affect

This article explores why Guess eyeglasses stand out and how you can find the perfect pair to complement your look.

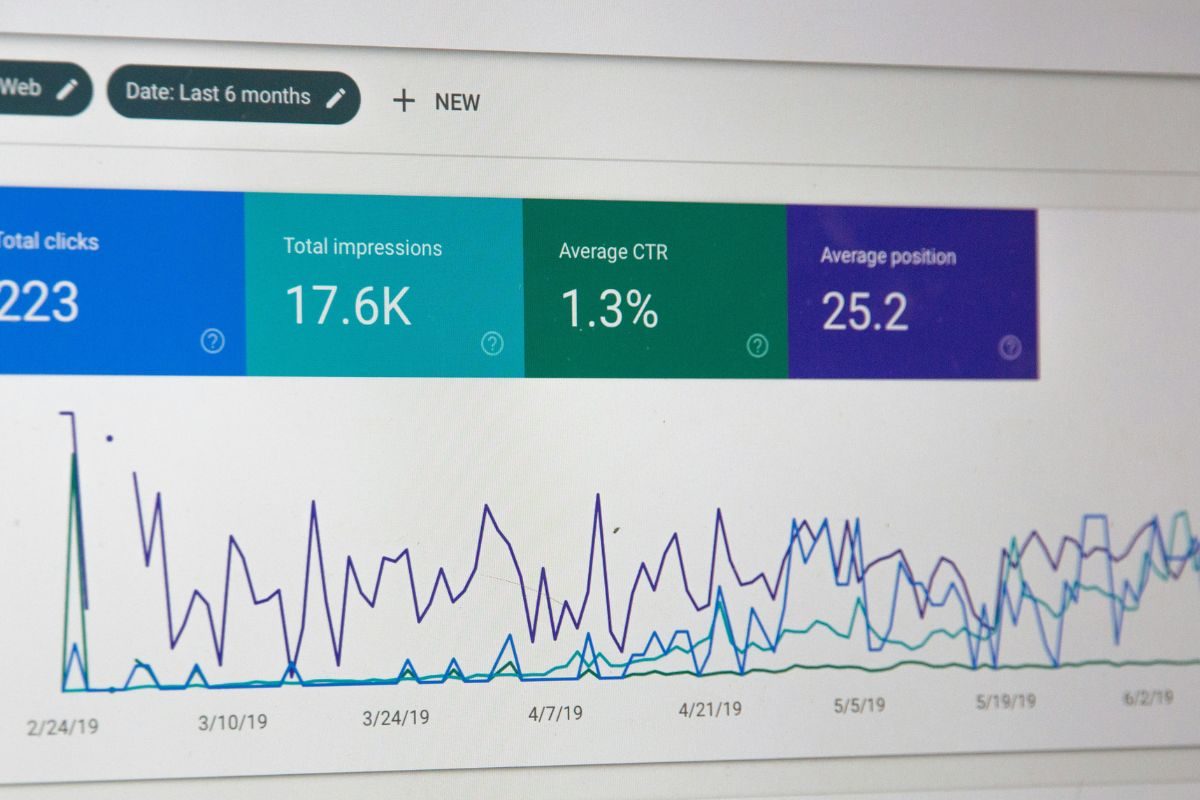

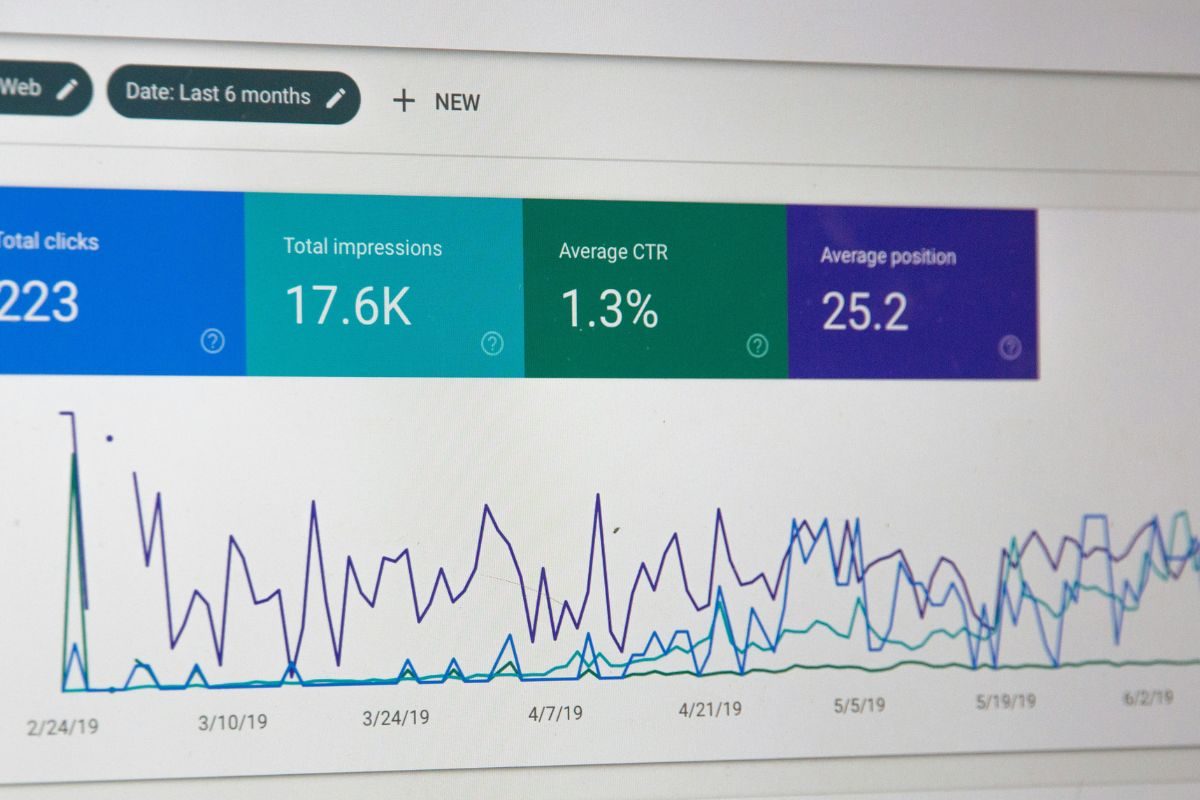

Discover how hiring a local SEO company in Kolkata can improve your online visibility, attract nearby customers, and grow your business faster.

Let’s be honest—everyone dreams of clear, glowing skin. We spend hours scrolling through skincare tips, trying out new products, and chasing that “glass skin” glow. But somewhere in that search,

Godrej Alira Sector 39 Gurgaon is offering spacious, secure, and luxury apartments designed for growing families, with top schools and hospitals nearby.

When it starts to malfunction, you’ll notice the effects right away: inconsistent temperatures, poor airflow, and a rise in energy costs.

The global Microscopy Device Market is undergoing a dynamic transformation, driven by an ever-expanding need for high-resolution imaging across healthcare, academia, and industry. As imaging needs become more sophisticated, the

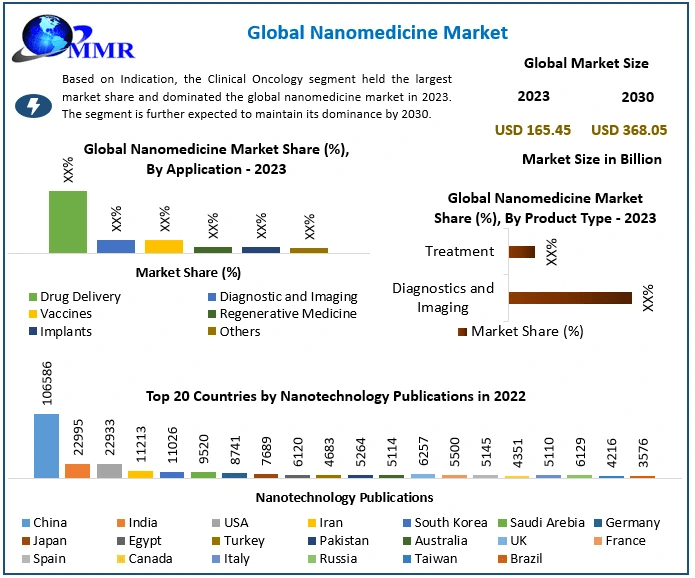

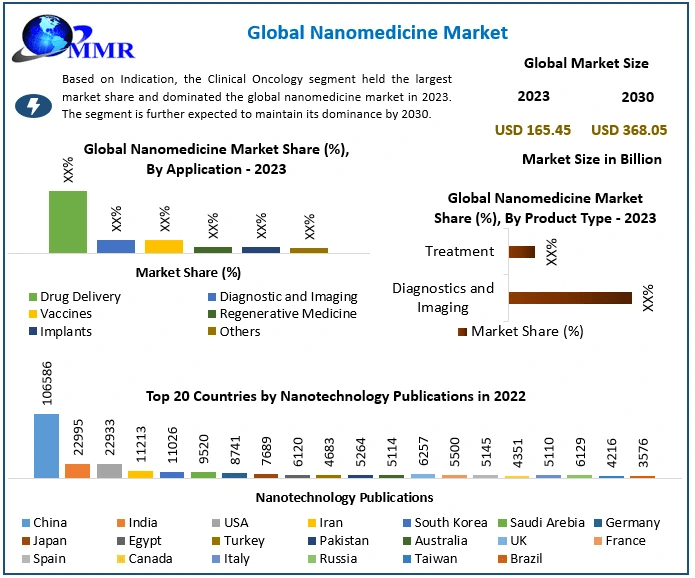

The Nanomedicine Market size was valued at USD 165.45 Billion in 2023 and the total Nanomedicine revenue is expected to grow at a CAGR of 12.1% from 2024 to 2030, reaching nearly USD 368.05 Billion by 2030.

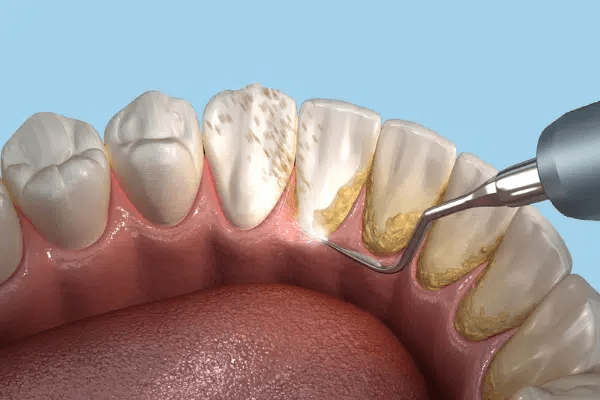

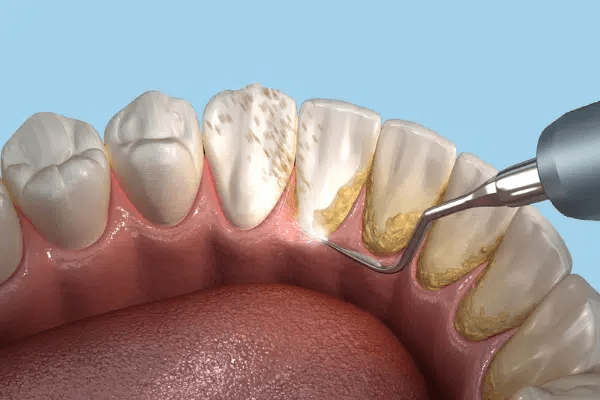

If you’ve ever typed “teeth cleaning near me” into a search bar, you’re definitely not alone. Keeping your smile bright and healthy is something we all want, but sometimes we

In today’s evolving interior design landscape, modern spaces demand more than just sleek furniture and muted palettes—they demand soul, texture, and authenticity. One element that beautifully marries tradition with contemporary aesthetics is the handmade

If you’ve got a bolster pillow at home (or are thinking of getting one), you might be wondering: what’s the best fabric for those bolster covers? After all, a cover

Explore Colleges, Admissions & Exams in India

One of the key reasons a back pain doctor in Clifton stands out is the personalized care they provide. Unlike many general practitioners or large medical centers that rely heavily on standardized protocols, a top back pain doctor in Clifton takes the time to understand the unique condition, symptoms, and lifestyle of each patient.

Explore why a React Native app development company or trading software development company in Saudi Arabia can unlock digital growth efficiently.

A home is more than just walls and furniture—it’s a sanctuary, a reflection of your values, and for many, a place where faith resides. Christian home decor goes beyond aesthetics;

Discover top-rated interior design companies in Delhi offering bespoke residential and commercial solutions. Transform your space with expert designers and innovative decor ideas.

The Global Medical Grade N95 Protective Masks Market is anticipated to rise at a considerable rate during the forecast period, between 2025 and 2033. In 2024, the market is growing at a steady rate, and with the rising adoption of strategies by key players, the market is expected to rise over the projected horizon.

A good night’s sleep starts with the right sleepwear—and in 2025, comfort is no longer the only criterion. Women today want their nightwear to be stylish, breathable, and versatile enough

Running a small or medium-sized business in Perth involves more than just offering a great product or service. Behind every successful business is a sound financial strategy. However, many business

BOPP (Biaxially-Oriented Polypropylene) film is a type of plastic film that has a wide range of applications in the packaging, labelling, and laminating industries.

Ranks rocket connects website owners with bloggers for free guest posting! Increase brand awareness and backlinks with strategic placements. But remember, quality content is key.